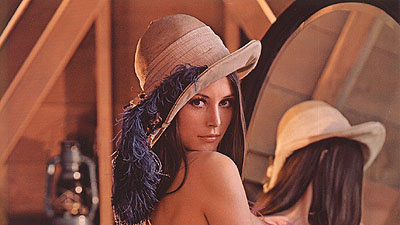

What does removing part of an image mean? Mathematically and programmatically, most of the time it means setting the array values for the corresponding area of the image to (0, 0, 0) in the RGB channels, i.e. black.
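A minimal sketch of that idea on a tiny made-up 4×4 RGB array (the pixel values are arbitrary, chosen only for illustration): NumPy slice assignment sets every pixel in the selected rows and columns to (0, 0, 0).

```python
import numpy as np

# A hypothetical 4x4 RGB image: every pixel starts as (200, 150, 100).
img = np.full((4, 4, 3), (200, 150, 100), dtype=np.uint8)

# "Remove" the 2x2 top-left block: rows 0-1 (y) and columns 0-1 (x).
# Assigning the scalar 0 broadcasts to all 3 channels, giving (0, 0, 0).
img[0:2, 0:2] = 0

print(img[0, 0])  # a removed pixel
print(img[3, 3])  # an untouched pixel
```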

Removing part of an image is useful in many situations. For example, in text-to-image applications such as DALL·E 2, we can edit part of an image with inpainting.

Image processing with the Python Imaging Library (PIL)

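```python
from PIL import Image
import numpy as np

img_array = np.array(Image.open("lena.jpg"))  # shape: (height, width, channels)
print(img_array.shape)
img_array
```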

```
(225, 400, 3)
array([[[108,  73,  53],
        [109,  72,  54],
        [109,  67,  51],
        ...,
        [ 56,  41,  44],
        [ 62,  47,  50],
        [ 70,  54,  57]],

       [[110,  75,  55],
        [110,  73,  55],
        [109,  67,  51],
        ...,
        [ 54,  39,  42],
        [ 57,  42,  45],
        [ 64,  48,  51]],

       [[109,  74,  55],
        [110,  73,  55],
        [107,  68,  53],
        ...,
        [ 51,  39,  41],
        [ 56,  41,  44],
        [ 61,  46,  49]],

       ...,

       [[130,  75,  54],
        [128,  74,  50],
        [130,  78,  56],
        ...,
        [123,  75,  61],
        [122,  74,  60],
        [128,  80,  66]],

       [[129,  72,  52],
        [127,  70,  50],
        [131,  77,  53],
        ...,
        [126,  78,  64],
        [122,  74,  60],
        [126,  78,  64]],

       [[134,  72,  49],
        [133,  72,  51],
        [126,  71,  51],
        ...,
        [140,  87,  71],
        [135,  79,  64],
        [140,  84,  67]]], dtype=uint8)
```

From the output above, we can see that the image is 225 pixels tall (y direction) and 400 pixels wide (x direction). Each pixel holds 3 values of type uint8 (0 to 255), one for each of the red, green and blue channels.
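Note that NumPy orders the axes as (rows, columns, channels), i.e. (height, width, channels), whereas Pillow's `Image.size` reports (width, height). A minimal sketch using a blank 400×225 image created with `Image.new` as a stand-in for the photo (the fill color is arbitrary):

```python
from PIL import Image
import numpy as np

# Blank stand-in image, 400 pixels wide and 225 pixels tall.
img = Image.new("RGB", (400, 225), color=(108, 73, 53))
arr = np.array(img)

print(img.size)    # Pillow: (width, height)
print(arr.shape)   # NumPy: (height, width, channels)

# Index the array as arr[y, x]: row (y) first, then column (x).
y, x = 10, 300
print(arr[y, x])   # the 3 RGB values of that pixel
```

Mixing up the two orders is a common source of bugs when cutting out a region, so it is worth checking which convention a given function expects.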

One thing to be careful about is the difference between the RGB and RGBA modes. For example, if you take a screenshot with Pillow's `ImageGrab.grab()`, the pixels inside the bounding box are returned as an “RGB” image on Windows but as an “RGBA” image on macOS. An RGB pixel needs 24 bits (three 8-bit channels), while an RGBA pixel needs 32 bits, the extra 8 bits holding the alpha (transparency) channel.
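One way to check the 24-bit versus 32-bit figures is to count how many bytes NumPy uses per pixel in each mode. A minimal sketch on blank images; `bits_per_pixel` is a hypothetical helper written for this illustration and assumes 8 bits per channel:

```python
from PIL import Image
import numpy as np

def bits_per_pixel(mode: str, size=(400, 225)) -> int:
    """Hypothetical helper: bits per pixel of a blank 8-bit-per-channel image in `mode`."""
    img = Image.new(mode, size)
    arr = np.asarray(img)          # shape (height, width, bands), dtype uint8
    width, height = size
    return arr.nbytes * 8 // (width * height)

for mode in ("RGB", "RGBA"):
    bands = Image.new(mode, (1, 1)).getbands()   # channel names, e.g. ('R', 'G', 'B')
    print(mode, bands, bits_per_pixel(mode))
```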

If the original image is in RGB mode but we need RGBA, we can use the `convert` method to convert the image from RGB to RGBA, or vice versa.

```python
img_rgba = Image.open("lena.jpg").convert('RGBA')  # add an alpha channel
np.array(img_rgba).shape
```

(225, 400, 4)
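When Pillow converts RGB to RGBA, the new alpha channel is fully opaque (every alpha value is 255); converting RGBA back to RGB simply drops the alpha channel. A minimal sketch on a blank stand-in image rather than lena.jpg:

```python
from PIL import Image
import numpy as np

img_rgb = Image.new("RGB", (400, 225), color=(108, 73, 53))

img_rgba = img_rgb.convert("RGBA")             # RGB -> RGBA
rgba = np.array(img_rgba)
print(rgba.shape)                              # (225, 400, 4)
print(rgba[..., 3].min(), rgba[..., 3].max())  # alpha channel: fully opaque

img_back = img_rgba.convert("RGB")             # RGBA -> RGB: alpha is discarded
print(np.array(img_back).shape)                # (225, 400, 3)
```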

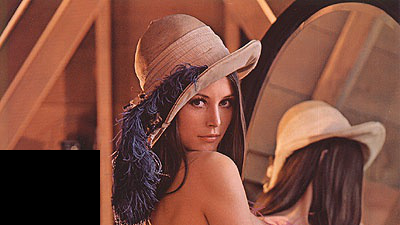

Let us get back to our task of removing part of the image.
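A minimal sketch of this step, assuming the region to remove is a rectangle given in pixel coordinates. The helper name `remove_region`, the box coordinates and the output file name are hypothetical, and a blank stand-in image replaces lena.jpg so that the sketch runs on its own:

```python
from PIL import Image
import numpy as np

def remove_region(img: Image.Image, left: int, top: int, right: int, bottom: int) -> Image.Image:
    """Hypothetical helper: copy of img with columns [left, right) and rows [top, bottom) set to 0."""
    arr = np.array(img)                # writable copy, shape (height, width, channels)
    arr[top:bottom, left:right] = 0    # rows are y, columns are x; 0 in every channel
    return Image.fromarray(arr)

img = Image.new("RGB", (400, 225), color=(108, 73, 53))  # stand-in for Image.open("lena.jpg")
result = remove_region(img, left=0, top=0, right=100, bottom=80)  # hypothetical top-left box
# result.save("lena_removed.png")     # hypothetical output file name
```

For an RGB image this gives opaque black. For an RGBA image, assigning 0 also zeroes the alpha channel, so the region becomes transparent rather than black; assign `(0, 0, 0, 255)` instead if opaque black is wanted.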


Now you can see that the left corner of the image is black.
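For a simple rectangle, a similar result can also be obtained without NumPy by pasting a solid color into a box with Pillow's `Image.paste`. A minimal sketch with a hypothetical box in the top-left corner and a blank stand-in image:

```python
from PIL import Image

img = Image.new("RGB", (400, 225), color=(108, 73, 53))  # stand-in for the photo

# paste() accepts a color instead of an image when the box is a 4-tuple
# (left, upper, right, lower); right and lower are exclusive. It modifies img in place.
img.paste((0, 0, 0), (0, 0, 100, 80))

print(img.getpixel((0, 0)))     # (0, 0, 0): inside the box
print(img.getpixel((100, 80)))  # (108, 73, 53): just outside the box
```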